The concern around GPU shortages and how these could impact the AI revolution

The AI revolution gained significant momentum with OpenAI's release of ChatGPT in November 2022. While it's evident that AI has the potential to profoundly transform various aspects of our lives, a significant obstacle currently hampers its progress: the availability of computational resources, particularly cutting-edge GPUs.

So, what are GPUs, and why are they crucial?

AI fundamentally involves solving complex mathematical problems, often on an enormous scale. Just as a calculator is necessary to solve mathematical problems, AI relies on powerful computational resources, commonly known as compute. Without sufficient compute, AI cannot thrive.

While various types of computational resources can be used for AI, GPUs (Graphics Processing Units) dominate for tasks requiring substantial computing power. Businesses running intensive AI models, such as language models, or those with low-latency requirements, need GPU-based inference.

How is GPU demand evolving?

- Pre-training Large Language Models (LLMs):

Training Large Language Models (LLMs) is known for its intensive compute demands. For instance, training GPT-3, the foundational model behind ChatGPT, consumed an estimated 1,287 megawatt-hours of electricity, roughly the annual consumption of 120 US homes.
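As a quick sanity check on the household comparison, the arithmetic can be sketched as below. The per-home figure of about 10.7 MWh per year is an assumption (a rough US average, not a number from this article), and the function name is purely illustrative.

```python
# Estimated energy used to train GPT-3, in megawatt-hours.
TRAINING_ENERGY_MWH = 1_287

# ASSUMPTION: rough average annual electricity use of one US home, in MWh.
ASSUMED_MWH_PER_US_HOME_PER_YEAR = 10.7


def equivalent_homes(energy_mwh: float,
                     mwh_per_home: float = ASSUMED_MWH_PER_US_HOME_PER_YEAR) -> float:
    """Return how many homes' annual electricity use the energy corresponds to."""
    return energy_mwh / mwh_per_home


homes = equivalent_homes(TRAINING_ENERGY_MWH)
print(f"Roughly {homes:.0f} US homes for one year")
```

The unit matters here: the same figure expressed in gigawatt-hours would correspond to roughly 120,000 homes rather than 120.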

This demanding task relies on extensive GPU clusters, and discussions on GPU demand frequently centre on this aspect. However, this high GPU demand applies mainly to the training phase, which happens only occasionally and at a handful of companies. Once trained, LLMs can be utilised across myriad applications, effectively distributing the training cost among numerous users. As a result, each business's share of the training GPU requirement is relatively small.

- Commercial Fine-tuning and Inferencing LLMs:

The most significant growth in GPU demand is occurring in the commercial fine-tuning and inferencing of LLMs. With the advent of highly capable AI and mature LLMs, businesses across the spectrum are eager to integrate AI applications. This trend is evident in the rapid proliferation of OpenAI-compatible solutions following the release of ChatGPT.

In the envisioned future, our interaction with LLMs will become ubiquitous, ranging from predictive text to auto-transcription. Meeting this level of adoption will demand immense compute capacity.

This surging demand is already straining resources. OpenAI's premium version of ChatGPT, which guarantees consistent uptime, has experienced intermittent unavailability due to overwhelming demand and, presumably, insufficient compute resources. If this is occurring at this early stage of the AI evolution, one can only imagine the challenges in the months and years ahead as usage continues to soar.

What will be the impact?

The exponential growth in GPU demand is far outpacing supply, leading to widespread GPU shortages. This presents two major issues:

- Exclusivity of AI: Insufficient supply often leads to substantial price hikes, restricting AI adoption to high-value use cases where benefits significantly outweigh costs. While this isn't inherently negative, it can stifle innovation. Furthermore, it concentrates AI's benefits in the hands of the wealthiest corporations, exacerbating the power imbalance in the AI landscape.

- Reduced Efficiency: The consequences of this shortage are already visible, with models and requests exceeding the hardware capacity allocated to services, resulting in slower performance and increased costs. These inefficiencies have a cascading effect on AI applications, making them prone to glitches and slowdowns.

Neither of these outcomes aligns with the desired future of AI.
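To illustrate why services slow down sharply as requests approach the hardware capacity allocated to them, here is a minimal sketch using a textbook single-server queue (M/M/1), where the mean time a request spends in the system is 1 / (service rate - arrival rate). This is a deliberate simplification and an assumption for illustration only; it is not a model of any particular provider's infrastructure, and the rates below are made up.

```python
def mean_latency_seconds(arrival_rate: float, service_rate: float) -> float:
    """Mean time in system for an M/M/1 queue, in seconds.

    arrival_rate: requests arriving per second.
    service_rate: requests the hardware can complete per second.
    Returns infinity when demand meets or exceeds capacity.
    """
    if arrival_rate >= service_rate:
        return float("inf")
    return 1.0 / (service_rate - arrival_rate)


# Hypothetical GPU server that can complete 10 requests per second.
CAPACITY = 10.0

for load in (5.0, 9.0, 9.9, 10.0):
    latency = mean_latency_seconds(load, CAPACITY)
    print(f"{load:>4} req/s -> mean latency {latency:.2f} s")
```

In this toy model, latency grows without bound as load nears capacity, which is the pattern behind the slowdowns described above: a small shortfall in compute can produce a disproportionately large drop in responsiveness.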

What can be done?

Fortunately, numerous strategies can mitigate our reliance on costly GPUs:

- Select Appropriate Models: While powerful AI models like GPT-4 have their place, many use cases can achieve comparable or superior performance with smaller, resource-efficient models fine-tuned on high-quality data.

- Model Compression and Hardware Optimization: Although these techniques are often confined to research labs, TitanML, through its Takeoff Inference Server, is democratising AI and machine learning deployment. This server enables companies to use more affordable GPUs, with some clients reporting over 90% reductions in compute costs and 2000% latency improvements within hours of deployment. TitanML has also achieved real-time deployment of the state-of-the-art Falcon LLM on commodity CPUs, a feat recognised by the industry, offering customers an even wider range of solutions.
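To give a feel for what model compression means in practice, the sketch below shows one common technique, symmetric int8 weight quantization, along with the memory needed to hold a model's weights at different precisions. It is a generic illustration, not a description of how the Takeoff Inference Server works; the 7-billion-parameter model size and all function names are assumptions chosen for the example.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization.

    Maps floats to integers in [-127, 127] using a single scale factor.
    Returns (quantized integers, scale).
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the quantized integers."""
    return [q * scale for q in quantized]


def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Memory for the weights alone (no activations or caches), in gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9


# Toy weights to show the round trip and the small error it introduces.
weights = [0.12, -0.5, 0.33, 1.27, -1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("quantized:", quantized)
print(f"max round-trip error: {max_error:.6f}")

# ASSUMPTION: a hypothetical model with 7 billion parameters.
HYPOTHETICAL_PARAMS = 7e9
for bits in (16, 8, 4):
    print(f"{bits}-bit weights: {weight_memory_gb(HYPOTHETICAL_PARAMS, bits):.1f} GB")
```

Halving the bits per weight halves the memory needed for the weights, which is one reason a compressed model may fit on a smaller, cheaper GPU. The trade-off is a small approximation error, which has to be checked against task accuracy for each use case.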

Conclusion

Over-reliance on scarce GPUs remains a pressing issue, and it may worsen before showing signs of improvement. Nonetheless, a wealth of best practices can reduce compute consumption when deploying AI, improving latencies and reducing costs. Addressing this challenge is pivotal to realising the full potential of the AI revolution, and it's a mission we are committed to at TitanML.

For more details about TitanML, please visit: titanml.co


Latest news and insights

Other forms of content

Sprint Campaigns

techUK's sprint campaigns explore how emerging and transformative technologies are developed, applied and commercialised across the UK's innovation ecosystem.

Activity includes workshops, roundtables, panel discussions, networking sessions, Summits, and flagship reports (setting out recommendations for Government and industry).

Each campaign runs for 4-6 months and features regular collaborations with programmes across techUK.

techUK's latest sprint campaign is on Robotics & Automation technologies. Find out how to get involved by clicking here.

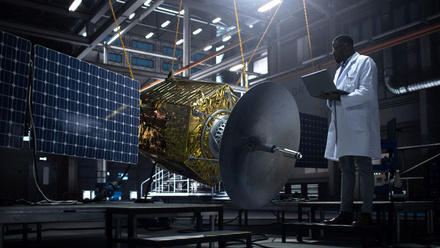

Running from September to December 2023, this sprint campaign explored how the UK can lead on the development, application and commercialisation of space technologies, bring more non-space companies into the sector, and ultimately realise the benefits of the New Space Economy.

These technologies include AI, quantum, lasers, robotics & automation, advanced propulsion and materials, and semiconductors.

Activity has taken the form of roundtables, panel discussions, networking sessions, Summits, thought leadership pieces, policy recommendations, and a report. The report, containing member case studies and policy recommendations, was launched in March 2024 at Satellite Applications Catapult's Harwell campus.

Get in touch below to find out more about techUK's ongoing work in this area.

Event round-ups

Report

Insights

Get in touch

Running from January to May 2024, this sprint campaign explored how the UK can lead on the development, application and commercialisation of the technologies set to underpin the Gaming & Esports sector of the future.

These include AI, augmented / virtual / mixed / extended reality, haptics, cloud & edge computing, semiconductors, and advanced connectivity (5/6G).

Activity took the form of roundtables, panel discussions, networking sessions, Summits, and thought leadership pieces. A report featuring member case studies and policy recommendations was launched at The National Videogame Museum in November 2024.

Get in touch below to find out more about techUK's future plans in this space.

Report

Event round-ups

Insights

Get in touch

Running from July to December 2024, this sprint campaign explored how the UK can lead on the development, application and commercialisation of web3 and immersive technologies.

These include blockchain, smart contracts, digital assets, augmented / virtual / mixed / extended reality, spatial computing, haptics and holograms.

Activity took the form of roundtables, workshops, panel discussions, networking sessions, tech demos, Summits, thought leadership pieces, policy recommendations, and a report (to be launched in 2025).

Get in touch below to find out more about techUK's future plans in this space.

Event round-ups

Insights

Get in touch

Running from February to June 2025, this sprint campaign is exploring how the UK can lead on the development, application and commercialisation of robotic & automation technologies.

These include autonomous vehicles, drones, humanoids, and applications across industry & manufacturing, defence, transport & mobility, logistics, and more.

Activity is taking the form of roundtables, workshops, panel discussions, networking sessions, tech demos, Summits, thought leadership pieces, policy recommendations, and a report (to be launched in Q4 2025).

Get in touch below to get involved or find out more about techUK's future plans in this space.

Upcoming events

Insights

Event round-ups

Get in touch

Campaign Weeks

Our annual Campaign Weeks enable techUK members to explore how the UK can lead on the development and application of emerging and transformative technologies.

Members do this by contributing blogs or vlogs, speaking at events, and highlighting examples of best practice within the UK's tech sector.

Summits

Tech and Innovation Summit 2025

Tech and Innovation Summit 2023

Tech and Innovation Summit 2024

Receive our Tech and Innovation insights

Sign-up to get the latest updates and opportunities across Technology and Innovation.