Intelligent compute: a recipe for sustainable and cost-effective growth (Guest blog from Cambridge Consultants)

Author: Karen Balbi, Sustainable Innovation - Digital Specialist, Cambridge Consultants

In today’s fast-paced digital landscape, businesses are increasingly turning to cloud computing to streamline operations, enhance scalability, and drive innovation. However, this is creating a data crisis, with everything being stored in the cloud regardless of whether the value justifies the cost or how much energy is consumed in the process.

This calls for a new wave of advanced AI algorithms at the edge to minimise data volumes, and for new approaches to scheduling resource-heavy compute. In this article, I explore the critical role of both in making businesses more efficient and reducing carbon emissions to promote sustainable growth.

Resolving the data storage crisis

In the ever-evolving landscape of data-driven decision-making, a fundamental principle stands out: data doesn’t inherently translate to value. In fact, sometimes less data yields more valuable insights. There are two areas of particular focus that must be considered.

Firstly, rather than indiscriminately storing and processing data in the cloud, consider a more discerning approach. Advances in edge compute - where computation occurs closer to the data source - can be a game-changer. By analysing data at the edge, we reduce latency, improve real-time responsiveness, and minimise unnecessary data transfers. This reduces environmental impact and costs, while encouraging businesses to prioritise insights over large volumes of raw data. Once the data has been processed, the raw data can be discarded at the edge. This removes the need to build devices with large amounts of memory, which would simply shift the environmental impact to the edge.
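As a minimal sketch of what "analyse at the edge, then discard the raw data" can look like, the Python below reduces a window of raw sensor readings to a handful of summary values before anything is transmitted. The function name `summarise_window`, the chosen statistics and the `alert_threshold` parameter are illustrative assumptions rather than a description of any particular product; a real deployment would compute whichever features its downstream insights actually need.

```python
import statistics
from typing import Dict, Sequence


def summarise_window(readings: Sequence[float], alert_threshold: float) -> Dict[str, float]:
    """Reduce a window of raw sensor readings to a compact summary on the device.

    Hypothetical example: only this small dictionary would be sent upstream,
    and the raw readings can be dropped once it has been computed.
    """
    if not readings:
        raise ValueError("cannot summarise an empty window")
    return {
        "count": len(readings),
        "mean": statistics.fmean(readings),
        "min": min(readings),
        "max": max(readings),
        "stdev": statistics.pstdev(readings),  # population standard deviation of the window
        "alerts": sum(1 for r in readings if r > alert_threshold),  # readings above the threshold
    }


if __name__ == "__main__":
    raw_window = [0.8, 1.1, 0.9, 7.5, 1.0]  # hypothetical vibration readings
    summary = summarise_window(raw_window, alert_threshold=5.0)
    del raw_window  # the raw data is no longer needed once summarised
    print(summary)
```

However many readings the window holds, the summary stays a fixed handful of numbers, which is where the reduction in transfer and storage comes from.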

Secondly, this frees you to reserve the cloud for extracting and processing the actionable insights that power informed decisions. Rather than drowning in an ocean of data, you can focus on extracting meaningful patterns and trends and uncovering hidden correlations. Adopting a service design approach can add significant value here, enabling businesses to define clearly the value their products bring and the specific insights required, and so minimise the transmission and storage of unnecessary data.

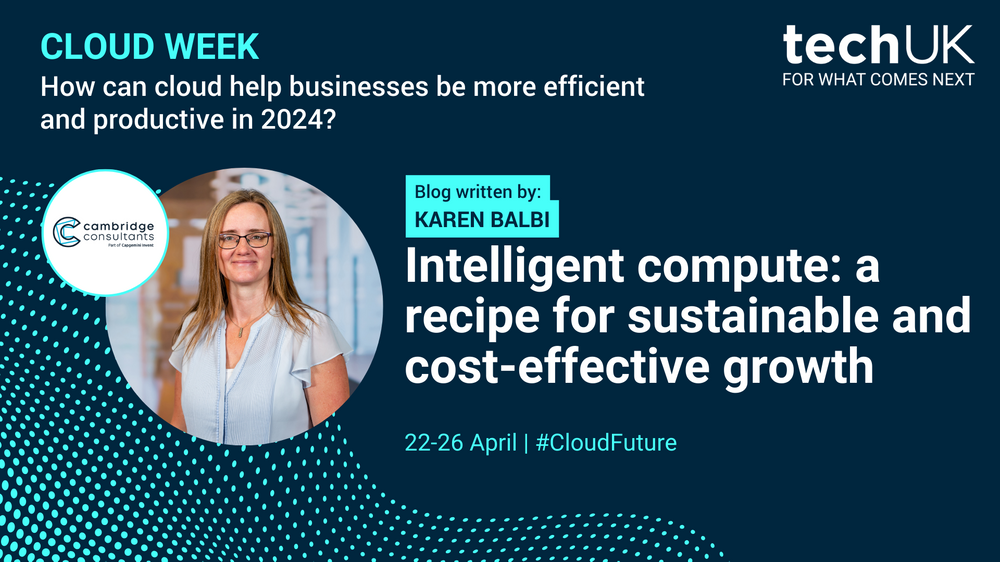

The following image visualises this approach and demonstrates the use cases it enables.

Image 1: Data volumes

This approach can enable real-time monitoring of manufacturing equipment or predictive maintenance for vehicles - all happening at the edge. Armed with these insights, organisations can fine-tune processes, allocate resources efficiently, and respond swiftly to changing conditions. Without edge compute, the data generated by autonomous vehicles could reach 4TB a day, resulting in an estimated 300TB a year and $350,000* a year in cloud storage costs alone. Communication costs, which depend on the chosen transmission mechanism, would come on top of this.

*Data volume and cost estimated by Intel (at Amazon’s 2016 rates)
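The cost side of this argument is simple arithmetic. The sketch below estimates first-year storage cost when data accumulates in the cloud month by month, and compares storing everything with keeping only a fraction after edge processing. The flat per-GB-month price, the 30-day month, the decimal TB-to-GB conversion and the retained fraction are all assumptions for illustration; they are not Amazon's 2016 rates or Intel's figures, and the model ignores storage tiers, deletion and communication costs.

```python
def first_year_storage_cost(
    daily_tb: float,
    retained_fraction: float,
    price_per_gb_month: float,
    days_per_month: int = 30,
) -> float:
    """Estimate first-year cloud storage cost for data that accumulates month by month.

    Assumptions (illustrative only): 1 TB = 1000 GB, a flat price per GB-month,
    no deletion, and each month billed on everything stored by the end of that month.
    """
    if not 0.0 <= retained_fraction <= 1.0:
        raise ValueError("retained_fraction must be between 0 and 1")
    monthly_gb = daily_tb * 1000 * days_per_month * retained_fraction  # new data uploaded per month
    stored_gb = 0.0
    total_cost = 0.0
    for _month in range(12):
        stored_gb += monthly_gb                       # this month's data joins what is already stored
        total_cost += stored_gb * price_per_gb_month  # bill on the full stored volume
    return total_cost


if __name__ == "__main__":
    PRICE = 0.03  # hypothetical USD per GB-month, for illustration only
    everything = first_year_storage_cost(daily_tb=4, retained_fraction=1.0, price_per_gb_month=PRICE)
    edge_filtered = first_year_storage_cost(daily_tb=4, retained_fraction=0.01, price_per_gb_month=PRICE)
    print(f"store everything: ${everything:,.0f}; keep 1% after edge processing: ${edge_filtered:,.0f}")
```

Under this model the cost scales linearly with `retained_fraction`, and each month's bill includes all previously stored data, so data that never leaves the edge saves money in every later month as well.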

Optimising AI to run at the edge

The value of moving more processing to the edge is clear. However, to enable it, we must radically rethink AI algorithms so that they run on the device itself, delivering high performance at low power. Here’s how:

- Lightweight Models: Develop compact models that require fewer resources. Techniques like quantization (reducing precision) and pruning (removing unnecessary weights) help achieve this.

- Model Compression: Use techniques like knowledge distillation to transfer knowledge from a large model to a smaller one.

- On-Device Inference: Optimize inference processes to run efficiently on edge devices. Techniques include model quantization, JIT (just-in-time) compilation, and hardware-specific optimizations.

- Federated Learning: Train models collaboratively across edge devices while keeping data decentralized. This minimizes data transfer to the cloud.
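To make the first bullet concrete, the sketch below shows two common, deliberately simple variants in pure Python: symmetric per-tensor int8 quantization (each weight stored as an integer in [-127, 127] plus one shared floating-point scale) and magnitude pruning (zeroing the fraction of weights with the smallest absolute values). These are illustrative choices applied to a flat list of weights, not the specific techniques Cambridge Consultants uses; production toolchains typically add refinements such as per-channel scales, activation calibration and fine-tuning after pruning.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero weights
    q = [max(-127, min(127, round(w / scale))) for w in weights]  # round, then clamp to int8 range
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 values and the shared scale."""
    return [scale * v for v in q]


def magnitude_prune(weights: Sequence[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest absolute value."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:k])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    layer = [0.5, -1.27, 0.01, 0.9]  # hypothetical layer weights
    q, scale = quantize_int8(layer)
    print("int8 values:", q, "scale:", scale)
    print("dequantized:", dequantize(q, scale))
    print("50% pruned:", magnitude_prune(layer, sparsity=0.5))
```

Storing int8 values instead of 32-bit floats needs roughly a quarter of the memory, at the cost of a rounding error of at most half a scale step per weight.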
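Knowledge distillation, from the second bullet, is commonly trained with a loss that pulls the student's temperature-softened output distribution towards the teacher's. The sketch below implements that common formulation for a single example: a weighted sum of the KL divergence between the softened teacher and student distributions, scaled by T² to keep gradient magnitudes comparable, and the ordinary cross-entropy on the true label. The `temperature` and `alpha` defaults are illustrative assumptions, and a real training loop would apply this over batches inside a deep learning framework.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable log-softmax of logits divided by a temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_total = m + math.log(sum(math.exp(z - m) for z in scaled))
    return [z - log_total for z in scaled]


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    true_label: int,
    temperature: float = 4.0,
    alpha: float = 0.5,
) -> float:
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * cross-entropy(student, label)."""
    log_p_teacher = log_softmax(teacher_logits, temperature)
    log_p_student = log_softmax(student_logits, temperature)
    # KL divergence between the softened teacher and student distributions
    kl = sum(math.exp(lt) * (lt - ls) for lt, ls in zip(log_p_teacher, log_p_student))
    # standard cross-entropy on the hard label, at temperature 1
    hard = -log_softmax(student_logits)[true_label]
    return alpha * temperature ** 2 * kl + (1 - alpha) * hard


if __name__ == "__main__":
    teacher = [1.0, 2.0, 5.0]  # hypothetical logits from a large model
    student = [0.5, 1.5, 2.0]  # hypothetical logits from a compact model
    print(distillation_loss(student, teacher, true_label=2))
```

The softened teacher distribution carries information about how similar the wrong classes are to the right one, which is what lets a much smaller student approach the larger model's behaviour.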
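For federated learning, the step that keeps raw data on the devices is aggregation: each device trains on its own data and sends back only updated model weights, which a server combines. The sketch below shows FedAvg-style aggregation, a weighted average of client weights by local dataset size, on flat weight lists. Local training, secure aggregation and the communication layer are omitted, and the client values in the example are made up.

```python
from typing import List, Sequence


def fed_avg(client_weights: Sequence[Sequence[float]], client_sizes: Sequence[int]) -> List[float]:
    """Average client model weights, weighting each client by its number of local training examples.

    Only weights reach this function; the clients' raw data never leaves the devices.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one dataset size per client and at least one client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total number of examples must be positive")
    n_params = len(client_weights[0])
    if any(len(w) != n_params for w in client_weights):
        raise ValueError("all clients must send the same number of parameters")
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


if __name__ == "__main__":
    # Hypothetical weights returned by three devices after one round of local training
    updates = [[0.10, -0.20], [0.30, 0.00], [0.20, -0.10]]
    sizes = [100, 300, 600]  # hypothetical number of local examples per device
    print(fed_avg(updates, sizes))
```

The averaged weights would then be sent back to the devices as the starting point for the next round.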

At Cambridge Consultants, we are implementing new algorithms on low-power chips with a view not just to putting AI on the edge but to bringing learning to the edge for our clients. Just as you do, such a system can update its view of the environment and adapt, providing a more human-centric notion of AI.

Reducing cloud footprint through scheduled compute

By shifting from on-premises infrastructure to the cloud, businesses gain agility, scalability, and cost savings of up to 66%. But they can go much further, drastically reducing the carbon footprint associated with high computational loads.

Optimising cloud resources also indirectly reduces a business's carbon footprint. However, the relationship between cost and carbon footprint is often complex: it varies with the energy sources in each geographic region, the availability of renewables, and the percentage of emissions offset, all of which affect reporting.

Companies should consider carefully which regions to use, as the share of renewable energy, and therefore the associated carbon footprint, varies greatly by location. Services such as Electricity Maps provide real-time carbon intensity data (gCO₂eq/kWh) for the electricity consumed in each region. Use this data to increase visibility and inform this decision-making process.
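The underlying arithmetic is straightforward: a job's operational emissions are roughly its energy use multiplied by the grid's carbon intensity. The sketch below applies this to pick the lowest-intensity region from a snapshot of readings, optionally restricted to regions the business is allowed to use (for example, for data-residency reasons). The region names and intensity values are hypothetical placeholders; in practice they would come from a carbon intensity data source such as Electricity Maps, whose API is not shown here. The model ignores embodied emissions, data-centre overheads and differences in hardware efficiency between regions.

```python
from typing import Dict, Iterable, Optional


def job_emissions_kg(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Operational emissions in kg CO2eq: energy (kWh) x carbon intensity (gCO2eq/kWh) / 1000."""
    return energy_kwh * intensity_g_per_kwh / 1000.0


def greenest_region(
    intensity_by_region: Dict[str, float],
    allowed: Optional[Iterable[str]] = None,
) -> str:
    """Return the allowed region with the lowest carbon intensity in the snapshot."""
    allowed_set = set(allowed) if allowed is not None else None
    candidates = {
        region: intensity
        for region, intensity in intensity_by_region.items()
        if allowed_set is None or region in allowed_set
    }
    if not candidates:
        raise ValueError("no allowed region has carbon intensity data")
    return min(candidates, key=candidates.get)


if __name__ == "__main__":
    # Hypothetical snapshot of carbon intensity (gCO2eq/kWh); real values change hourly.
    snapshot = {"region-a": 450.0, "region-b": 120.0, "region-c": 30.0}
    energy_kwh = 120.0  # hypothetical energy use of one batch job
    for region, intensity in snapshot.items():
        print(region, round(job_emissions_kg(energy_kwh, intensity), 1), "kg CO2eq")
    print("greenest allowed region:", greenest_region(snapshot, allowed=["region-a", "region-b"]))
```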

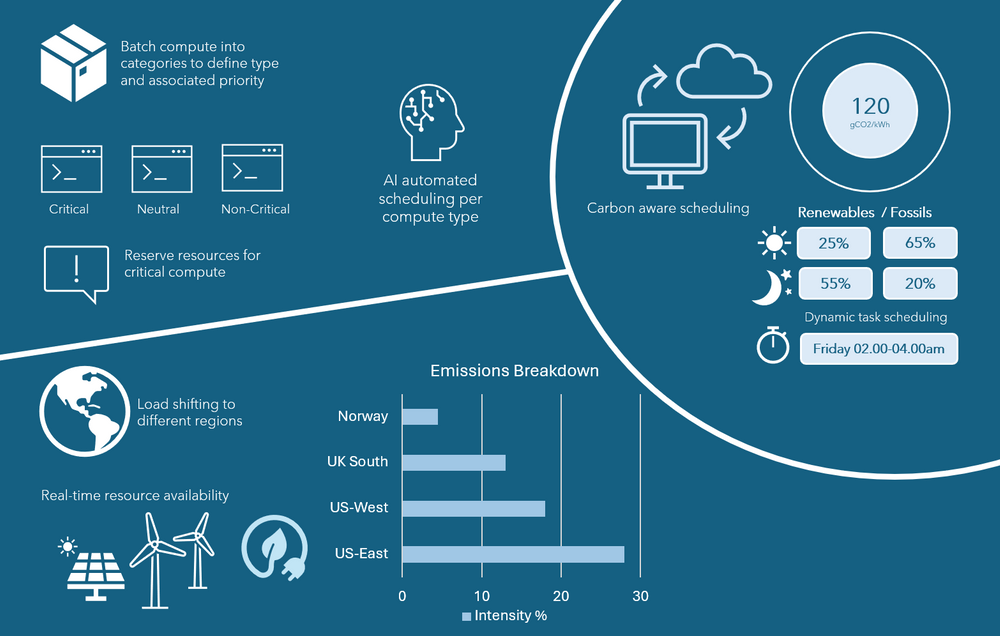

Another aspect to consider is strategic compute allocation and scheduling. By discerning the type of compute needed and its criticality, we empower AI systems to automatically schedule non-critical compute tasks to occur during optimal periods. The result is reduced carbon emissions and cost savings. The following image illustrates the concept of how to strategically allocate compute resources.

Image 2: Carbon aware scheduling

In this example, the optimal time to schedule the compute is Friday at 2am in Norway. At Cambridge Consultants, we have tools that make emissions and costs visible to the development team, informing the architectural decisions we make for our clients. Continuous visibility of each feature's impact, in terms of compute used and data stored, enables developers to optimise each feature and operate it sustainably.
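The scheduling idea in Image 2 can be sketched as a search over a carbon intensity forecast: critical jobs run immediately in their default region, while deferrable jobs are placed in the region and contiguous time window with the lowest average intensity that still meets their deadline. This is an illustrative sketch, not the tooling described above; the `Job` fields, region names and hourly forecast values are hypothetical, and it assumes the job draws constant power so that its emissions are proportional to the window's average intensity.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Job:
    name: str
    duration_h: int  # contiguous hours of compute the job needs
    deadline_h: int  # job must finish within this many hours from now
    critical: bool   # critical jobs are never deferred or moved


def schedule(job: Job, forecasts: Dict[str, List[float]], default_region: str) -> Tuple[str, int, float]:
    """Return (region, start_hour, average_intensity) for a job.

    `forecasts` maps each region to an hourly carbon intensity forecast in
    gCO2eq/kWh, where index 0 is the current hour.
    """
    if job.duration_h < 1:
        raise ValueError("duration_h must be at least 1")
    if job.critical:
        window = forecasts[default_region][:job.duration_h]
        if len(window) < job.duration_h:
            raise ValueError("forecast is shorter than the job")
        return default_region, 0, sum(window) / job.duration_h
    best: Optional[Tuple[str, int, float]] = None
    for region, series in forecasts.items():
        # latest start that finishes before the deadline and within the forecast horizon
        last_start = min(job.deadline_h, len(series)) - job.duration_h
        for start in range(last_start + 1):
            avg = sum(series[start:start + job.duration_h]) / job.duration_h
            if best is None or avg < best[2]:
                best = (region, start, avg)
    if best is None:
        raise ValueError(f"no window fits job {job.name!r} before its deadline")
    return best


if __name__ == "__main__":
    forecast = {  # hypothetical hourly forecasts
        "region-a": [300.0, 280.0, 250.0, 260.0],
        "region-b": [50.0, 40.0, 20.0, 30.0],
    }
    retrain = Job("model-retrain", duration_h=2, deadline_h=4, critical=False)
    print(schedule(retrain, forecast, default_region="region-a"))  # -> ('region-b', 2, 25.0)
```

A production scheduler would also weigh price, capacity and data-transfer costs, and re-plan as forecasts are updated.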

Efficiency, growth, responsibility

Cost control and intelligent compute are not just buzzwords - they are essential components of sustainable cloud and business strategies. Advances in edge compute are significantly reducing the data that needs to be stored and paid for, whilst scheduling compute enables organisations to make greener energy choices.

Cambridge Consultants is excited to lead the development of deep tech innovation in areas such as deep learning, edge AI and low power compute, working with clients to create a more sustainable future and propel business growth.

Cloud computing and the path to a more sustainable future

This techUK insights paper highlights the commitment of our members to a sustainable approach to cloud computing and sets out six core best practice principles for a greener future for the tech sector.